Lessons learned from a year of building AI agents

Dec 24, 2025

What a year it’s been for AI progress! Ilya Sutskever said it best in Dwarkesh's podcast: “You know what’s crazy? That all of this is real.” Current AI capabilities are surreal, and yet getting them to work reliably in an enterprise setting is still very much an engineering discipline.

At Champ, we got a chance to work with a number of customers this past year, from fast-growing startups to steady-state scale-ups to large enterprises. Our platform is used by teams across customer support, product operations, trust & safety, and developer support. Here are some lessons we’ve learned from building AI agents in 2025.

1) AI impact is very much real, and it’s moving faster than you think

Even if you follow the space closely, the baseline keeps shifting. Models get better, tool calling gets more reliable, costs drop, and suddenly workflows that felt impossible become tractable. If you’re not in the trenches building, it’s impossible to keep up with the progress.

The practical takeaway: design your agent systems assuming the underlying models will improve.

2) Agent building is still too hard for most teams

Building agents is not just “prompting”. It requires setting up secure data access, defining tools, handling edge cases, debugging failures, and maintaining them after shipping.

We can think of building AI agents as equivalent to natural language programming. Many of the challenges of building good software apply to building AI agents too.

3) Most agents use similar LLMs and tool-calling mechanics. Context engineering makes all the difference.

Most agents follow the same loop: a) read state, b) decide the next action using an LLM, c) call a tool, d) update state, e) repeat until done.
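The loop above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a description of any particular product: `AgentState`, `run_agent`, `fake_decide`, and the `lookup_order` tool are all hypothetical names, and `fake_decide` stands in for a real LLM call.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class AgentState:
    goal: str
    history: list = field(default_factory=list)  # (tool_name, result) pairs
    done: bool = False


def run_agent(state: AgentState, decide: Callable, tools: dict, max_steps: int = 10) -> AgentState:
    """Run the read/decide/act/update loop until done or out of steps."""
    for _ in range(max_steps):              # stop condition: step budget
        action = decide(state)              # (a) + (b): read state, pick next action
        if action["tool"] == "finish":      # stop condition: decider says it is done
            state.done = True
            break
        result = tools[action["tool"]](**action["args"])  # (c): call a tool
        state.history.append((action["tool"], result))    # (d): update state
    return state                            # (e): the for-loop is the repeat


# Hypothetical stand-in for an LLM: look the order up once, then finish.
def fake_decide(state: AgentState) -> dict:
    if not state.history:
        return {"tool": "lookup_order", "args": {"order_id": "A1"}}
    return {"tool": "finish", "args": {}}


tools = {"lookup_order": lambda order_id: {"order_id": order_id, "status": "shipped"}}
final = run_agent(AgentState(goal="Where is order A1?"), fake_decide, tools)
print(final.done, final.history)
```

Note how little of this is loop mechanics. Almost everything that matters in practice lives in what `decide` sees and which tools it is offered.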

What differentiates them is context engineering: what you put in the prompt, how you represent state, knowledge retrieval, tool schemas, memory, guardrails, and stop conditions. This is the key difference between agents that work reliably and those that don't.

At Champ AI, we try to put ourselves in the shoes of our customers to truly understand their real processes and edge cases well enough to help them design effective agents.

4) Text-only workflows are basically a solved problem, multi-modal/browser still a challenge

If the only inputs are text-based (chats, emails, documents, etc.), AI agent performance can be very reliable, provided the right context is shared and guardrails are in place.

Multimodal agents (complex PDFs, voice, video, browser automation) will be the next reliability battleground. The moment you add a browser, you inherit all the chaos of the web: auth flows, popups, rate limits, CAPTCHAs, etc. With voice, you have to deal with real-world noise, interruptions, accents, and varied communication styles.

We are working on several techniques to stay at the forefront of multimodal agents.

5) Secure access to enterprise data is one of the biggest bottlenecks

One of our biggest blockers to AI deployment is data access. Every company has a lot of data that could be used to automate workflows, but exposing it securely to AI agents is a problem we are all still figuring out together.

This requires building secure APIs that expose data to AI agents. But many internal data systems are not built for agents; they are built for teams of knowledge workers operating through web-based UIs.

6) Enterprises will have to modernize for AI the same way they modernized for PCs and cloud

Working backwards from a world where a significant amount of knowledge work is expected to be done by AI agents supervised by humans, we still need to collectively figure out how to evolve our systems, processes, and tools to make the best use of AI. We’re at the start of the kind of shift we’ve seen before:

paper ledgers + filing cabinets → digital records

on-prem → cloud

human-only workflows → AI (+human) workflows

Companies that treat “AI readiness” as a real modernization effort will see more of their AI pilots turn from brittle experiments to real value drivers.

7) Agents need performance management, just like humans

If you can’t measure it, you can’t improve it. Practical agent management requires:

eval sets that match real workflows (including hard edge cases)

continuous regression testing when prompts/tools change

tracing and human reviews/escalations for failures

targeted fine-tuning or policy updates when a failure mode repeats

Production agents need an evaluation flywheel, not one-time prompt tweaks.
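As a rough sketch of the regression-testing step above, the snippet below scores an agent against a small eval set and refuses a change that drops below the baseline pass rate. Everything here is an assumption for illustration: the eval cases, the `run_evals` and `check_regression` helpers, and the two toy keyword "agents" that stand in for real prompt or tool variants.

```python
from typing import Callable

# Hypothetical eval set: each case pairs an input with the expected label.
EVAL_SET = [
    {"input": "I want my money back", "expected": "refund"},
    {"input": "How do I reset my password?", "expected": "account"},
    {"input": "Refund my password reset fee", "expected": "refund"},  # hard edge case
]


def run_evals(agent: Callable[[str], str], cases: list) -> tuple:
    """Return the pass rate and the failing cases (for tracing and human review)."""
    failures = [c for c in cases if agent(c["input"]) != c["expected"]]
    return 1 - len(failures) / len(cases), failures


def check_regression(agent: Callable[[str], str], cases: list, baseline: float) -> bool:
    """True if the candidate scores at least as well as the recorded baseline."""
    pass_rate, _ = run_evals(agent, cases)
    return pass_rate >= baseline


def current_agent(text: str) -> str:
    text = text.lower()
    return "refund" if "refund" in text or "money back" in text else "account"


def candidate_agent(text: str) -> str:
    # A "prompt change" that checks for passwords first and breaks the edge case.
    text = text.lower()
    if "password" in text:
        return "account"
    return "refund" if "refund" in text or "money back" in text else "account"


baseline, _ = run_evals(current_agent, EVAL_SET)
ok_to_ship = check_regression(candidate_agent, EVAL_SET, baseline)
_, candidate_failures = run_evals(candidate_agent, EVAL_SET)
print(baseline, ok_to_ship, candidate_failures)
```

The failing cases matter as much as the score: they are what gets traced, reviewed, and folded back into the eval set.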

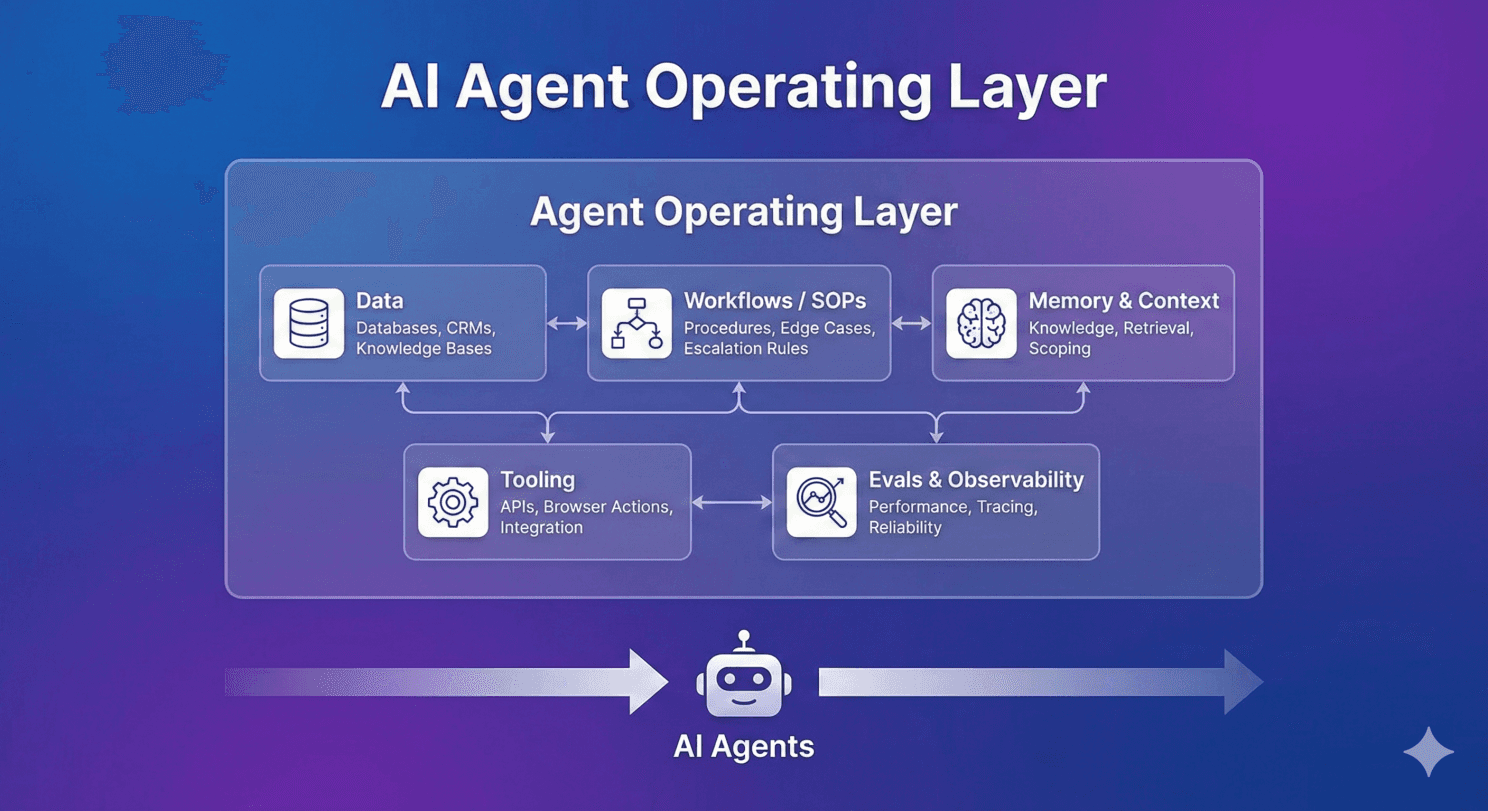

8) The “system of record” for the AI era won’t be just a database. It’ll be the agent operating layer.

As models and infra get commoditized, a lot of “agent capability” starts to look the same. The durable value shifts to proprietary software that makes agents safe, reliable, and cheap to run in the real world.

The layer for agent-driven operations becomes a bundle of:

Data: the current systems of record (databases, CRMs, knowledge bases)

Workflows / SOPs: the canonical definition of “how this work is done,” including edge cases and escalation rules

Memory & context: what the agent should know, what it’s allowed to remember, and how context is retrieved and scoped per task

Tooling: the integration layer (APIs, browser actions, database queries) with clear contracts, permissions, and idempotency

Evals & observability: a continuous way to measure performance, catch regressions, and improve reliability over time

The companies that win will have the best machinery for operating a fleet of agents: deploying changes safely, explaining decisions, tracing failures, and improving performance like an engineering system.
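To make the "clear contracts, permissions, and idempotency" part of the tooling item concrete, here is a small sketch of a tool wrapper that checks a permission scope and deduplicates retried calls by an idempotency key. The `RefundTool` class, the `refunds:write` scope, and the key format are hypothetical, and the in-memory dict stands in for whatever durable store a real system would use.

```python
from dataclasses import dataclass, field


@dataclass
class RefundTool:
    """Hypothetical tool: enforces a scope, then deduplicates by idempotency key."""
    required_scope: str = "refunds:write"
    results: dict = field(default_factory=dict)  # idempotency key -> prior result

    def call(self, scopes: set, idempotency_key: str, order_id: str, amount: float) -> dict:
        # Permission check: the agent must hold the scope this tool requires.
        if self.required_scope not in scopes:
            raise PermissionError(f"missing scope: {self.required_scope}")
        # Idempotency: a retried call with the same key returns the earlier
        # result instead of issuing a second refund.
        if idempotency_key in self.results:
            return self.results[idempotency_key]
        result = {"order_id": order_id, "refunded": amount}
        self.results[idempotency_key] = result
        return result


tool = RefundTool()
first = tool.call({"refunds:write"}, "task-42:refund", "A1", 25.0)
retry = tool.call({"refunds:write"}, "task-42:refund", "A1", 25.0)  # agent retried
print(first == retry, len(tool.results))

try:
    tool.call({"orders:read"}, "task-43:refund", "A2", 10.0)
    denied = False
except PermissionError:
    denied = True
print(denied)
```

Agents retry more often than human operators do, so a side-effecting tool without this kind of deduplication tends to fail in expensive ways.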

9) We are still in the early innings of pricing models for AI

Seat-based pricing has worked really well for agents that augment human capability. ChatGPT Pro, Cursor, and Suno are good examples here.

Outcome-based pricing works well when AI agents handle work in the background without needing proactive human involvement. Champ’s SOP-based process agents and Sierra’s support agents are good examples here.

But as intelligence gets cheaper and value shifts to the agent operating layer, AI is no longer just about labor replacement or augmentation, and pricing models need to reflect that.

10) Finally, SaaS is very much not dead

Takes like “SaaS is dead, I can vibe code it in a weekend” and “systems of record are dead” are, in my opinion, very much not grounded in reality. Shipping software is never the hard part. Maintaining it is. In the real world:

requirements change constantly

edge cases show up only at volume

security, permissions, and audit expectations expand

integrations break as APIs and schemas evolve

and someone has to be on call when things go wrong

Agents make this more true, not less. An agent deployment is a living system: prompts change, tools change, policies change, models shift, and reliability can regress if you’re not measuring it.

So yes, you can hack together a workflow in a weekend. The question is: can you keep it working six months later, across customers, with safety, uptime, and accountability?

Conclusion

We’re still so early. A lot of what looks like “agent magic” today will be table stakes soon, and a lot of what looks impossible will quietly become normal. I’m very much looking forward to another year of insane AI progress and building in the trenches!